After the presidential debate in early September and Taylor Swift’s subsequent endorsement of Kamala Harris, many viewers drew attention to a deepfake of Swift endorsing Donald Trump, which was then reposted by the former president. Upon hearing this news, many citizens were alarmed by how realistic deepfakes have become and how easily they might be used to spread misinformation.

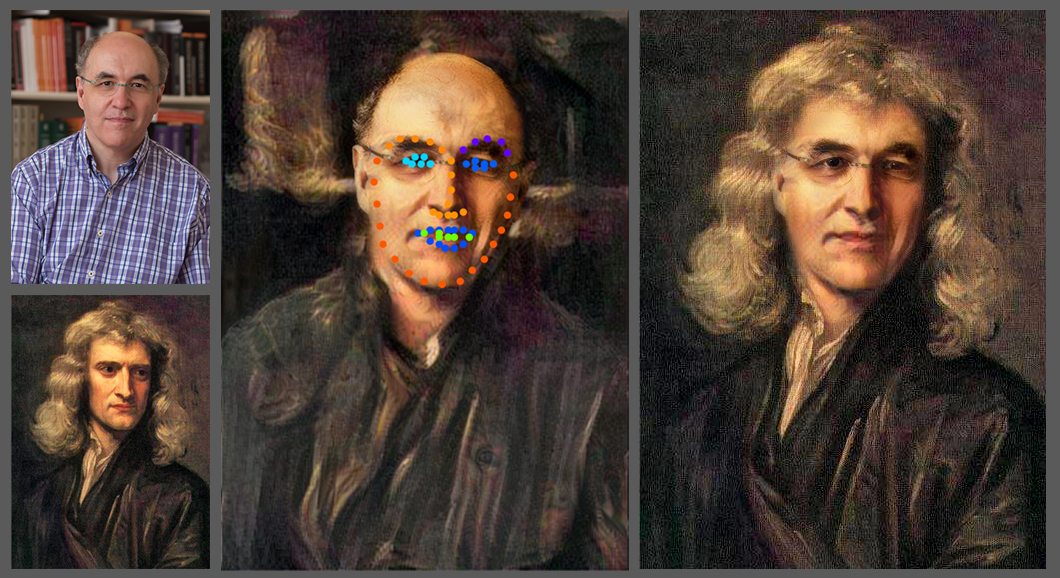

By definition, deepfakes are videos or images of a person that have been altered using AI to make that person appear to be someone else. The U.S. Department of Homeland Security (DHS) defines them as: “an emergent type of threat falling under the greater and more pervasive umbrella of synthetic media… [deepfakes] create believable, realistic videos, pictures, audio, and texts of events which never happened.” Though deepfakes have existed since at least 2017, rapid advances in AI have made them faster to produce, easier to access, and more realistic.

The DHS goes on to claim that the biggest threat of deepfakes is that they “could be used to simulate or alter a specific individual or the representation of that individual.” One example the DHS provides is a 2017 deepfake video depicting an Israeli actress engaging in sexual acts.

Though the Swift example is directly tied to politics, many deepfakes are made to produce inappropriate images, which can then be used as blackmail.

In South Korea, new “Nth Rooms” have returned to the headlines because of their use of AI. Currently, over 400 schools across the country each have their own large Telegram chatroom, to which students send pictures of their female friends, classmates, and sometimes even teachers. Other students, or often an automated bot, then use AI to produce inappropriate deepfakes from those pictures.

Female students in South Korea have reported feeling constantly anxious and overwhelmed because they have no way of knowing whether deepfakes of them are circulating or whether their male classmates have seen them.

Similar cases have been reported in the United States as well. In Washington, a male student at Issaquah High School used a “nudification app” on homecoming photos to edit and spread pictures of his female classmates, according to the New York Times.

In some schools, teachers and students alike refuse to take yearbook pictures out of fear that the photos will be used to produce non-consensual inappropriate images.

Statistics reveal that roughly 90% of deepfake victims are female. According to Glamour, “99% of those targeted by deepfake pornography are women.” The chat rooms in South Korean schools show that victims are not only adults but can be as young as elementary schoolers.

As we enter a new era in which AI is advancing rapidly, the potential for exploitation grows in parallel. Schools must now worry about plagiarism, programmers are increasingly concerned about job security, and women must be careful about their media presence as the potential for deepfakes increases.